Contact: University of Pennsylvania, Levine 402, myatskar@cis.upenn.edu

I am an Assistant Professor at the University of Pennsylvania in the Department of Computer and Information Science.

I did my PhD at the University of Washington, co-advised by Luke Zettlemoyer and Ali Farhadi.

I was a Young Investigator at the Allen Institute for Artificial Intelligence for several years working with their computer vision team, Prior.

My work is interdisciplinary, spanning Natural Language Processing, Computer Vision, and Fairness in Machine Learning.

I received a Best Paper Award at EMNLP for work on gender bias amplification (Wired article).

My research broadly explores how language can be used to structure visual perception. I work on machine learning approaches that enable tight coupling between how people express themselves in language and how machine behavior is specified. A central thread in my research is trying to understand how machine learning systems inherit human bias. My lab currently explores two main research themes around expanding the abilities of artificial intelligence systems:

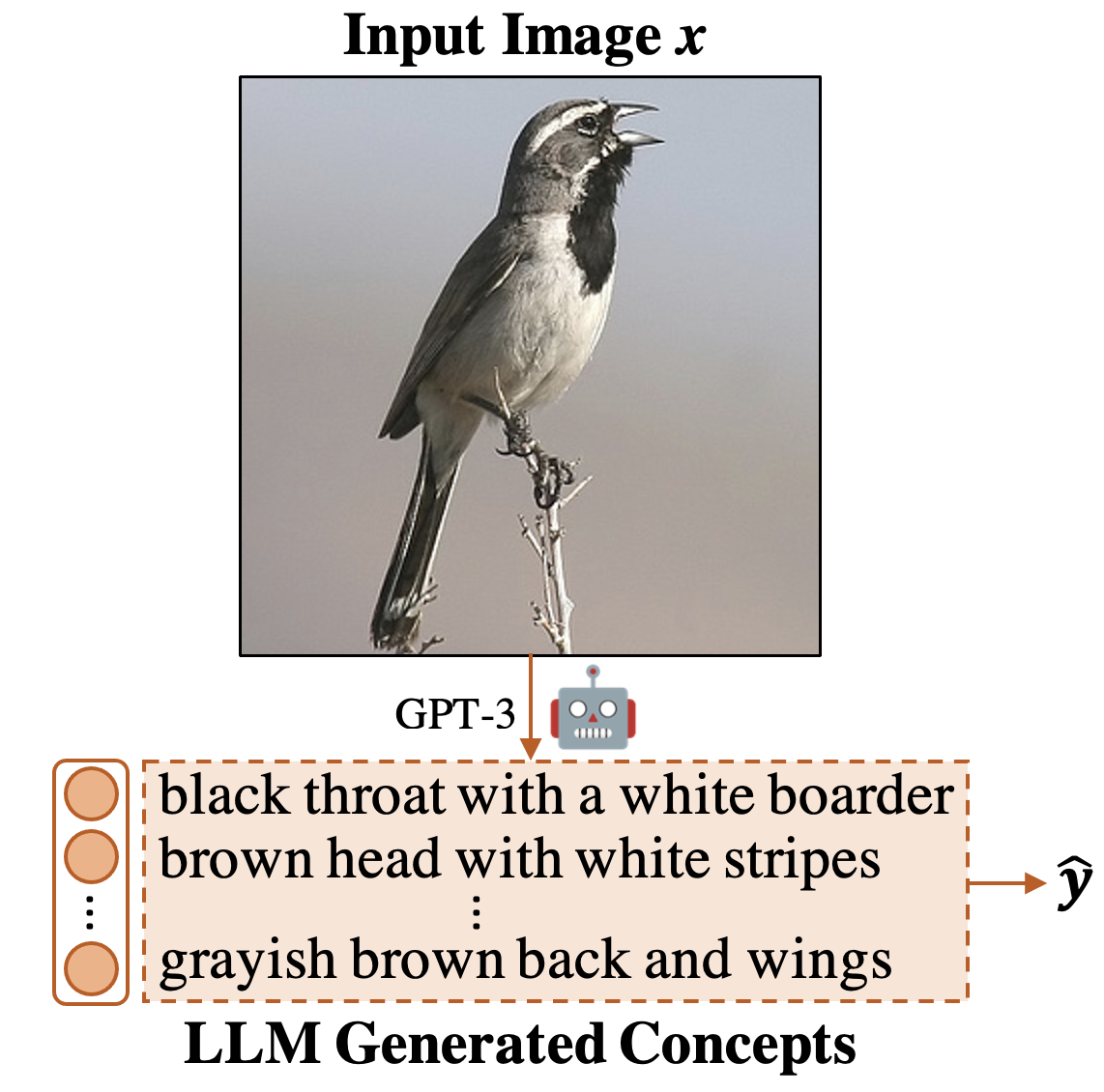

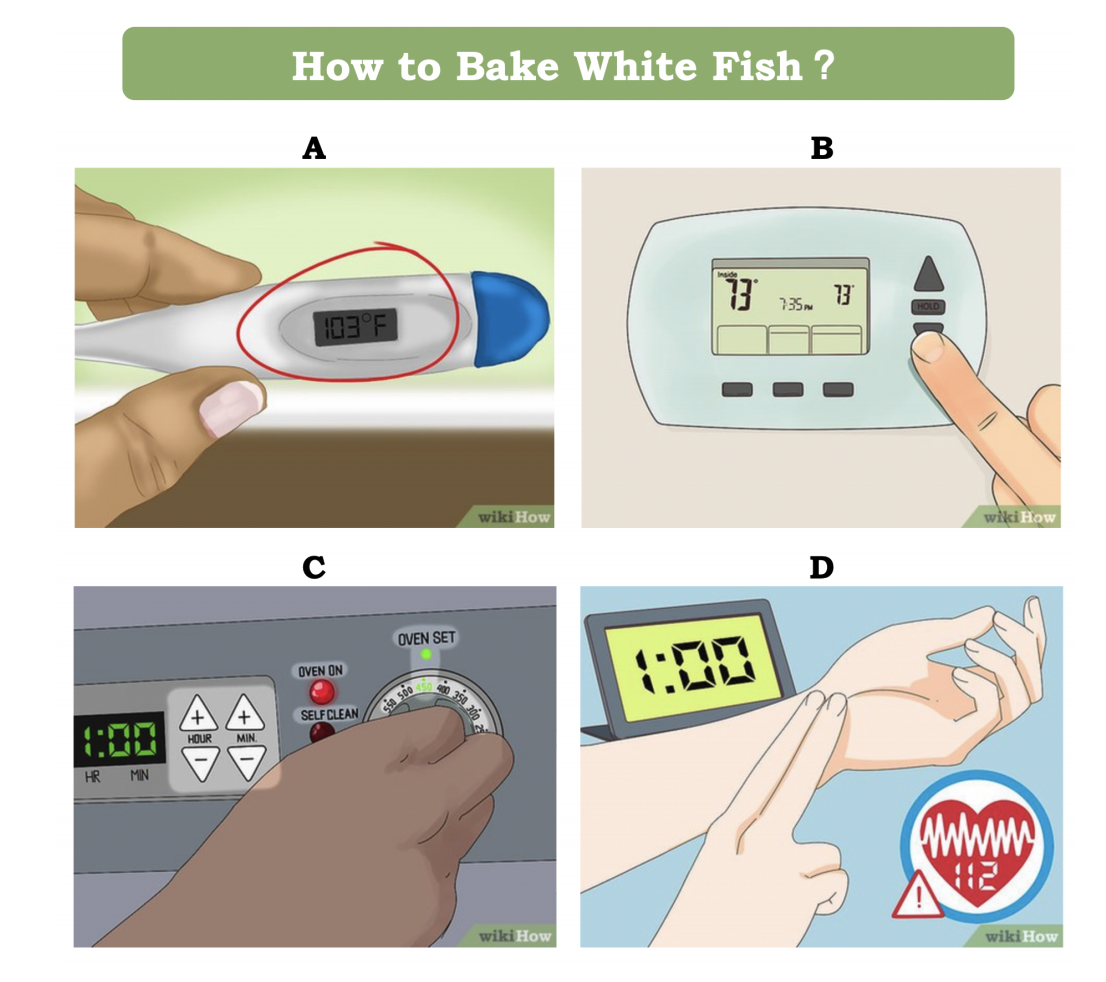

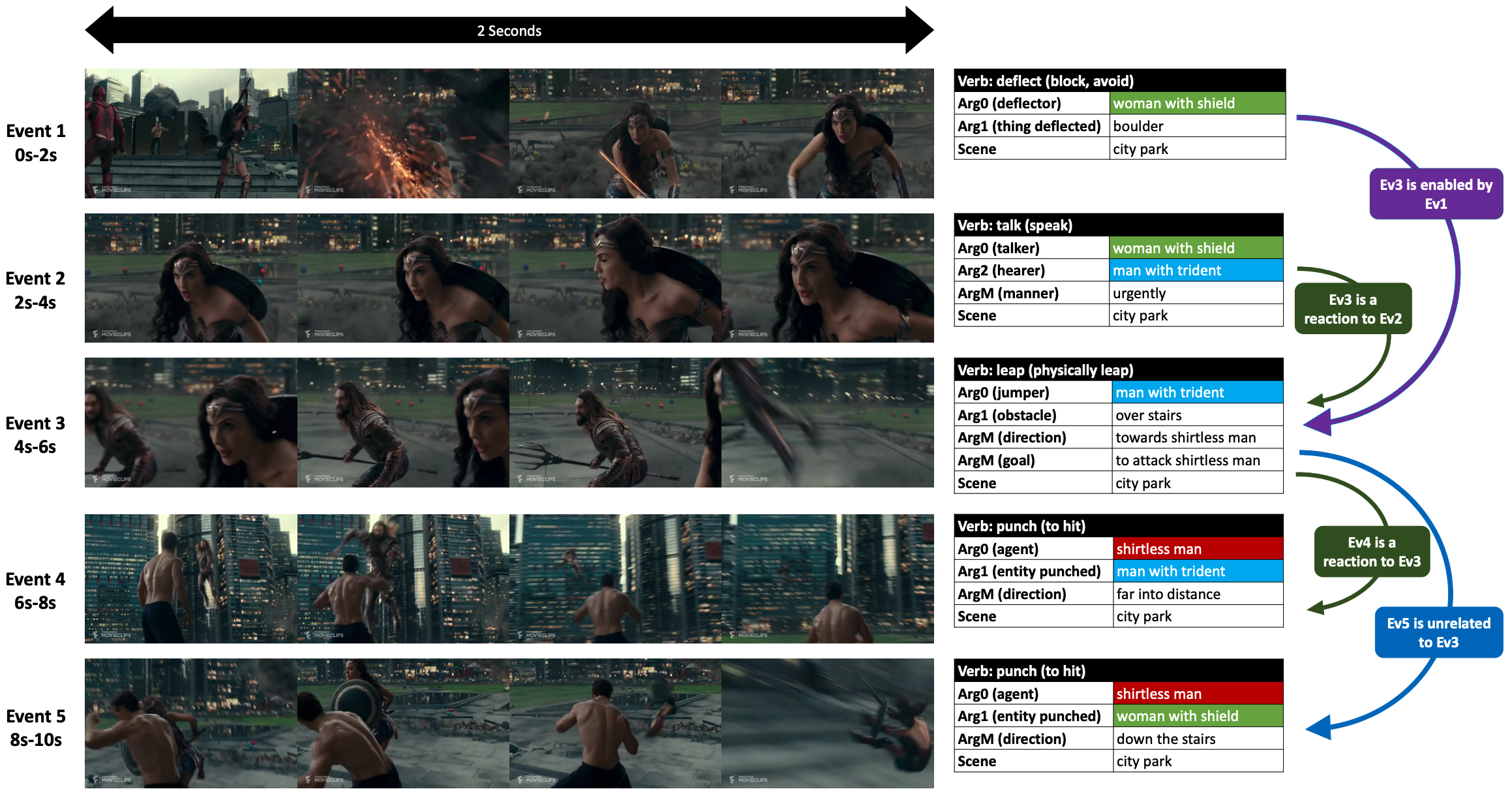

Natural language is an effective human tool for communicating important world knowledge. This knowledge can be extracted and used to create explicit priors for how visual recognition systems need to behave. Such systems can be more data-efficient, interpretable, and capture a wider range of human abilities.

Recent Projects:

| Language Model Guided Bottlenecks | Goal-Step Inference using Wikihow | Situations in Video |


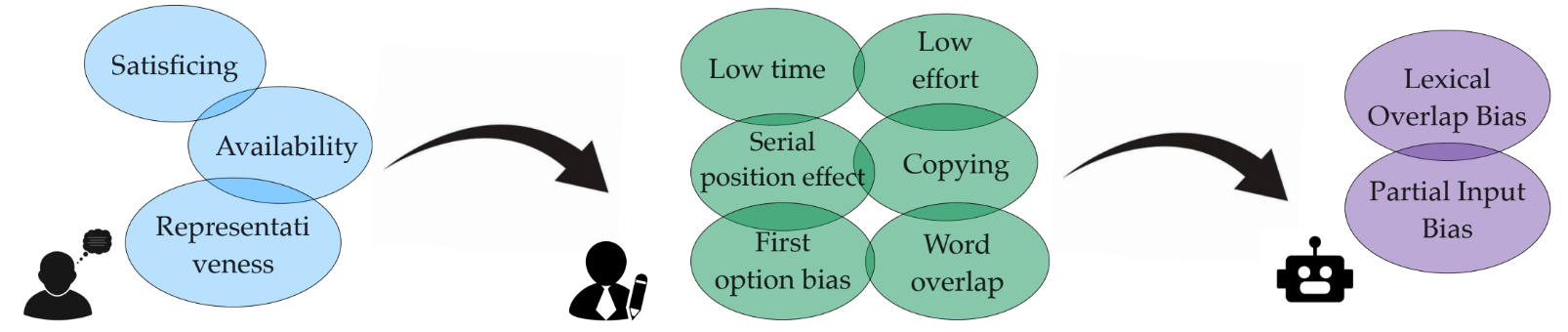

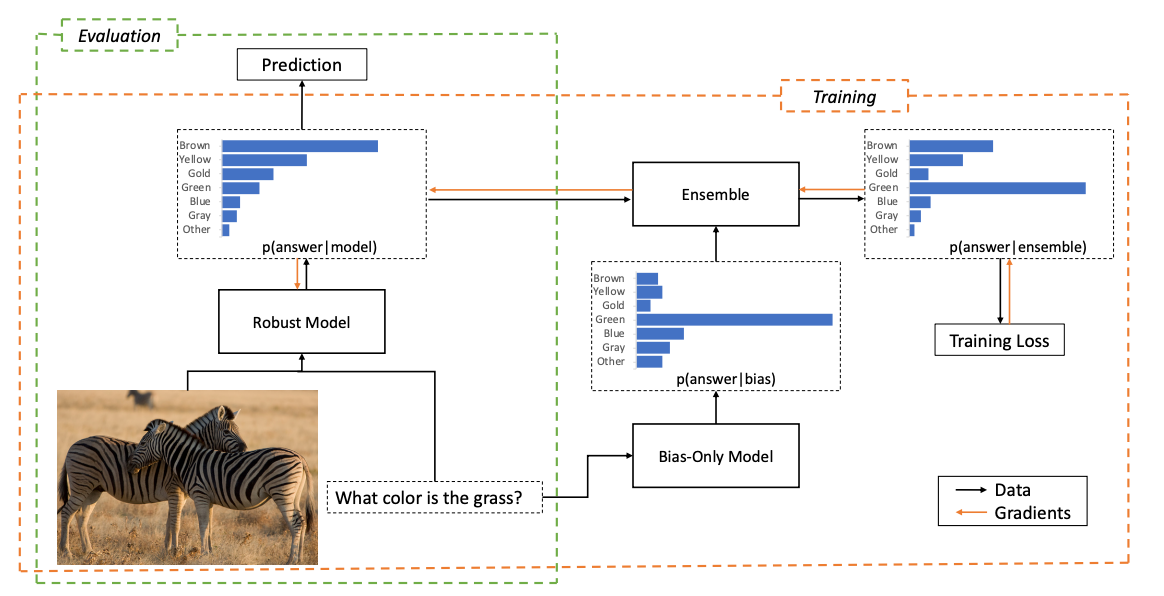

Machine learning systems depend on human specification through explicit annotation, collected data, and model design. In all parts of this process, people may unknowingly bias systems and cause them to be brittle. In such cases, systems may fail to generalize under distribution shift or make gender-biased predictions when they are uncertain. It is important to characterize and control how human biases are transferred to machine learning systems.

Recent Projects:

| Annotator Cognitive Heuristics | Ensembles for Reducing Dataset Bias | Gender Bias Amplification |


PhD Students

- Chaitanya Malaviya (w/ Dan Roth)

- Yue Yang (w/ Chris Callison-Burch)

- Weiqiu You

- Artemis Panagopoulou (w/ Chris Callison-Burch)

Master's Students

Alumni

- Daniel Kim (Undergraduate to UW PhD)

- Lucy Yuewei Yuan (Undergraduate to Meta)