EvAC3D: From Event-based Apparent Contours to 3D Models via Continuous Visual Hulls

Abstract

3D reconstruction from multiple views is a successful computer vision field that has been deployed in many applications. The state of the art is based on traditional RGB frames, which enable the optimization of photo-consistency across views. In this paper, we study the problem of 3D reconstruction from event cameras. We are motivated by the advantages of event-based cameras in terms of low power and latency, and by biological evidence that eyes in nature capture the same kind of data and still perceive 3D shape well. The foundation of our hypothesis that 3D reconstruction is feasible using events lies in the information contained in the occluding contours and in the continuous scene acquisition with events. We propose Apparent Contour Events (ACE), a novel event-based representation that defines the geometry of the apparent contour of an object. We represent ACE by a spatially and temporally continuous implicit function defined in the event x-y-t space. Furthermore, we design a novel continuous Voxel Carving algorithm enabled by the high temporal resolution of the Apparent Contour Events. To evaluate the performance of the method, we collect MOEC-3D, a 3D event dataset of common real-world objects. We demonstrate EvAC3D's ability to reconstruct high-fidelity mesh surfaces from real event sequences while allowing the 3D reconstruction to be refined with each individual event.

Video

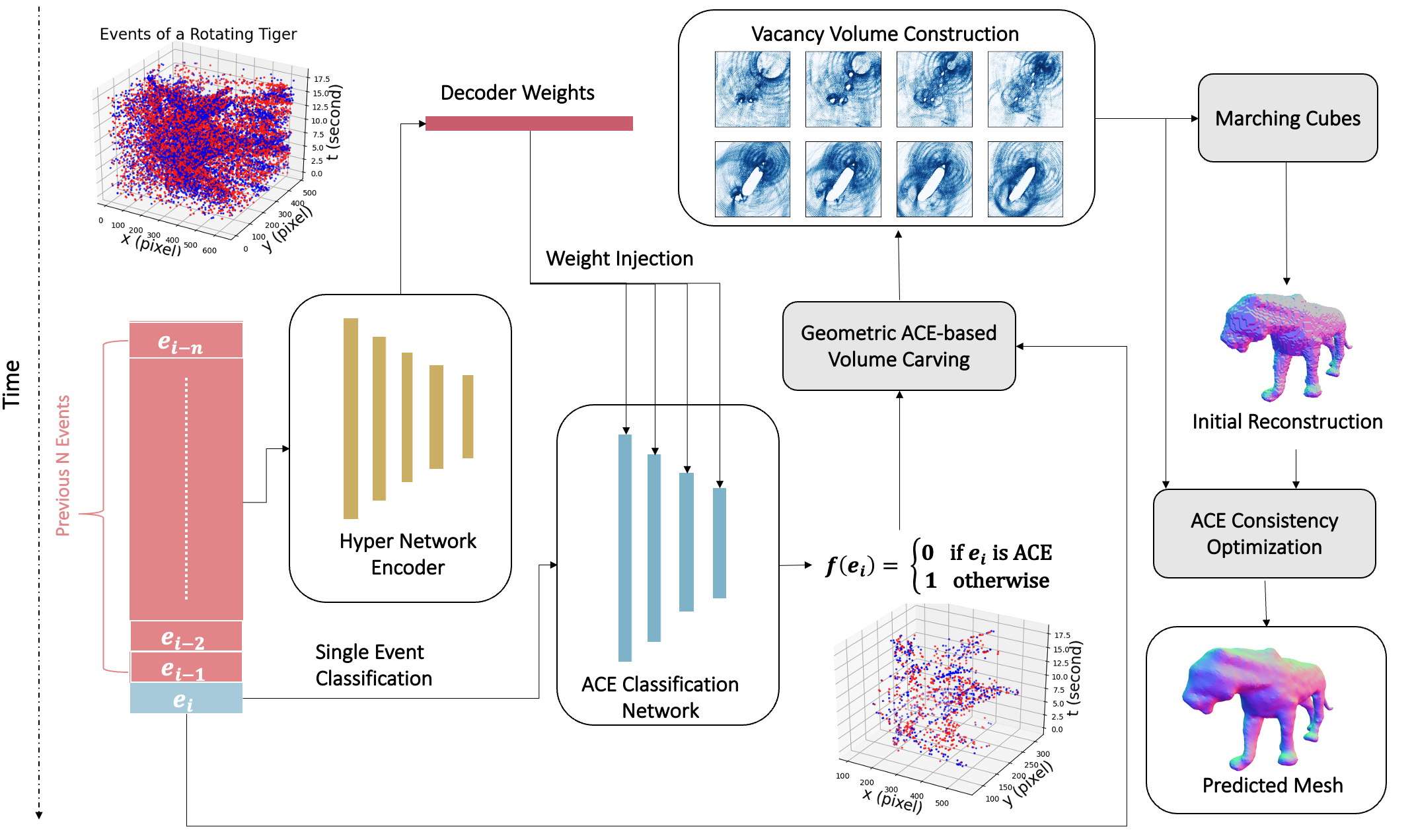

Method Overview

Apparent Contour Event (ACE)

The main challenge in reconstructing objects from events is finding the appropriate geometric quantities that can be used for surface reconstruction. In frame-based reconstruction algorithms, silhouettes are used to encode the rays from the camera center to the object. However, computing silhouettes from events requires integrating them into frame-based representations, which limits the temporal resolution of the reconstruction updates. Additionally, since events represent changes in the log of light intensity, events are only observed where the image gradients are nonzero. Therefore, few events are observed on smooth object surfaces with little texture, so the interior of the object's silhouette is not directly visible in the event stream. Together, these two facts make traditional silhouettes ill-suited for events. To address both shortcomings, we introduce Apparent Contour Events (ACE), a novel representation that encodes the object geometry while preserving the high temporal resolution of the events.
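The second point can be made concrete with the idealized event generation model commonly used for event cameras: a pixel fires an event whenever its log intensity has changed by at least a contrast threshold since its last event. The sketch below is an illustration under that assumption, not part of EvAC3D; the function `generate_events`, the helper `render_square`, the threshold value, and the synthetic scene are all hypothetical. A uniformly bright, textureless square translating over a dark background fires only at its leading and trailing edges, while its interior stays silent.

```python
import numpy as np


def generate_events(frames, times, threshold=0.2, eps=1e-3):
    """Idealized event camera (illustrative assumption): a pixel fires when its
    log intensity has changed by at least `threshold` since its last event.

    Simplification: at most one event per pixel per frame, even if the
    change spans several thresholds.
    """
    log_ref = np.log(frames[0] + eps)      # per-pixel reference log intensity
    events = []                            # (x, y, t, polarity)
    for frame, t in zip(frames[1:], times[1:]):
        log_now = np.log(frame + eps)
        diff = log_now - log_ref
        ys, xs = np.nonzero(np.abs(diff) >= threshold)
        for y, x in zip(ys, xs):
            events.append((int(x), int(y), float(t), 1 if diff[y, x] > 0 else -1))
            log_ref[y, x] = log_now[y, x]  # reset reference only where a pixel fired
    return events


def render_square(left, size=8, width=32, height=16, fg=0.8, bg=0.2):
    """Hypothetical scene: uniform bright square (no texture) on a dark background."""
    img = np.full((height, width), bg)
    img[4:12, left:left + size] = fg
    return img


# Translate the square one pixel per frame, from left = 5 to left = 9.
frames = [render_square(left) for left in range(5, 10)]
times = np.arange(len(frames)) * 1e-3
events = generate_events(frames, times)
print(sorted({x for x, _, _, _ in events}))  # [5, 6, 7, 8, 13, 14, 15, 16]
```

Events appear only in columns swept by an edge (negative polarity at the trailing edge, positive at the leading edge). Columns 9 to 12 stay inside the square in every frame and never fire, so a filled silhouette cannot be read directly off the event stream.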

Event-based Visual Hull

Continuous Volume Carving provides smooth, incremental updates to the carved volume. This is accomplished by carving exclusively with ACEs. Each ACE corresponds to a ray from the camera center that is tangent to the object surface at a point on the apparent contour. Thanks to the high temporal resolution of event cameras, ACEs are obtained from a continuum of viewpoints along the camera trajectory.
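The per-event carving idea can be illustrated with a simplified sketch. It assumes a pinhole camera with known intrinsics `K`, a known world-to-camera pose `(R, t)` at each event timestamp, and a boolean occupancy grid over a cube. A ray through an apparent-contour pixel grazes the object without entering its interior, so the voxels it crosses can be marked as free space. The helpers `backproject_ray` and `carve_with_ace` are hypothetical names, and this is not the paper's implementation: it samples the ray at half-voxel steps instead of using an exact voxel traversal (such as Amanatides and Woo's), and it ignores that the voxel containing the tangency point lies on the surface and may be over-carved.

```python
import numpy as np


def backproject_ray(u, v, K, R, t):
    """Origin and unit direction (world frame) of the ray through pixel (u, v).

    Assumes a pinhole camera with world-to-camera pose x_cam = R @ X + t.
    """
    t = np.asarray(t, dtype=float)
    origin = -R.T @ t                                    # camera center in world frame
    d = R.T @ np.linalg.solve(K, np.array([u, v, 1.0]))  # viewing direction
    return origin, d / np.linalg.norm(d)


def carve_with_ace(occ, lo, hi, u, v, K, R, t, max_depth):
    """Hypothetical per-event carving step (not the EvAC3D implementation).

    occ: boolean (n, n, n) grid over the cube [lo, hi)^3, True = occupied.
    Every voxel hit by a sample on the contour ray is marked empty, since a
    ray tangent to the surface does not pass through the object's interior.
    """
    n = occ.shape[0]
    size = (hi - lo) / n
    origin, d = backproject_ray(u, v, K, R, t)
    # Sample every half voxel; an exact traversal would avoid skipping
    # voxels that the ray only clips at a corner.
    lams = np.arange(0.0, max_depth, size / 2)
    pts = origin[None, :] + lams[:, None] * d[None, :]
    idx = np.floor((pts - lo) / size).astype(int)
    inside = np.all((idx >= 0) & (idx < n), axis=1)
    idx = idx[inside]
    occ[idx[:, 0], idx[:, 1], idx[:, 2]] = False
    return occ


# Toy example: camera at z = -2 looking along +z through the principal point.
K = np.array([[100.0, 0.0, 16.0], [0.0, 100.0, 16.0], [0.0, 0.0, 1.0]])
R = np.eye(3)
t = np.array([0.0, 0.0, 2.0])
occ = np.ones((11, 11, 11), dtype=bool)   # voxels of size 0.2 over [-1.1, 1.1)^3
carve_with_ace(occ, -1.1, 1.1, 16.0, 16.0, K, R, t, max_depth=4.0)
print(occ.sum())  # 1320: the 11 voxels along the column (5, 5, :) are carved
```

In a full pipeline of this kind, `carve_with_ace` would be called once per ACE in timestamp order, with the pose looked up at each event's timestamp, so the volume is refined event by event as the contour rays sweep through space.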

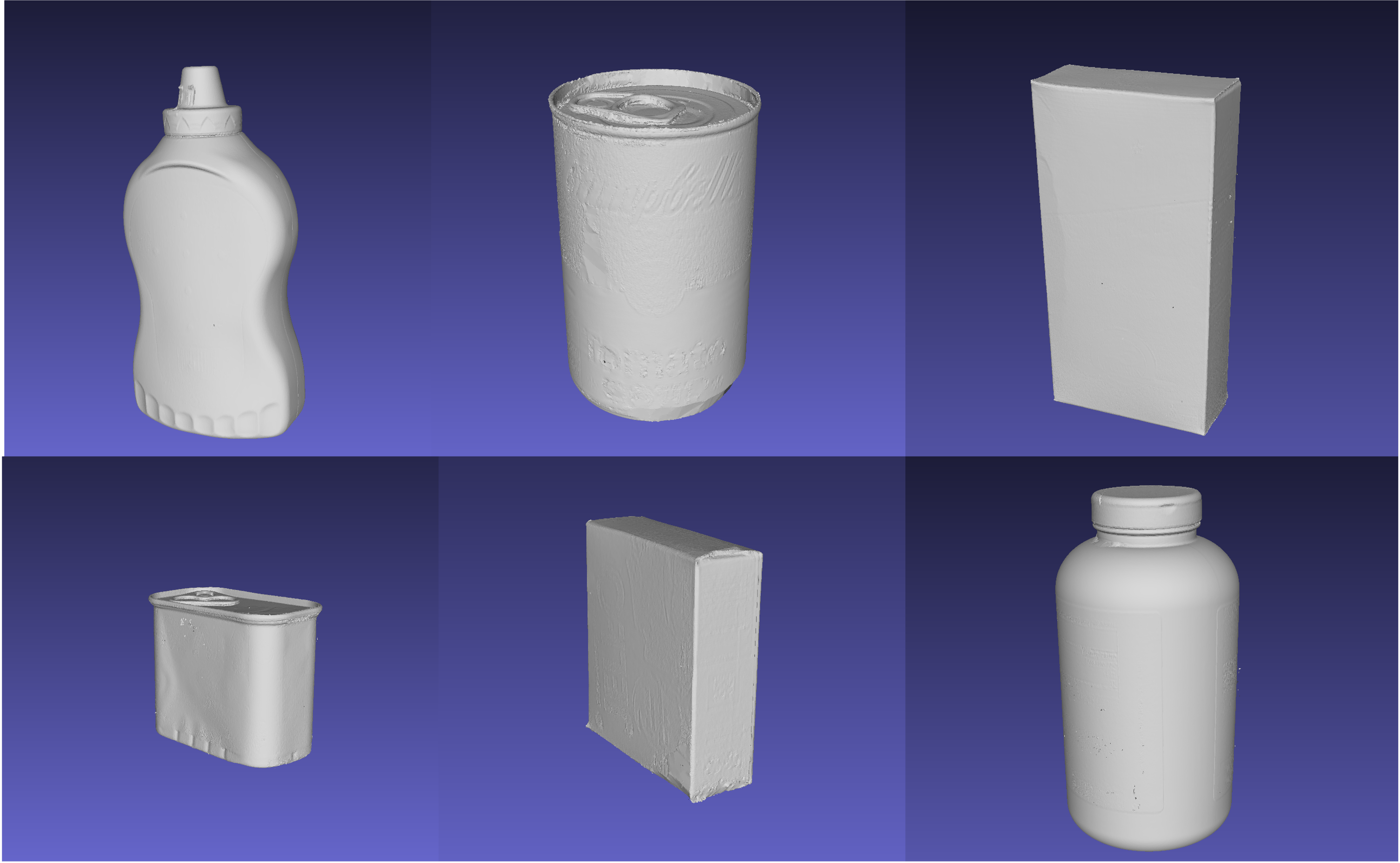

MOEC-3D Dataset

We collect the Multi Object Event Camera Dataset in 3D (MOEC-3D), a 3D event dataset of real objects. Ground-truth 3D models are captured with high accuracy using an industrial-grade Artec Spider scanner. Details about the dataset and the steps of data collection can be found in the Supplementary Material. Here are some examples of the scanned objects:

Citation

@inproceedings{Wang2022EvAC3D,
  title={EvAC3D: From Event-based Apparent Contours to 3D Models via Continuous Visual Hulls},
  author={Wang, Ziyun and Chaney, Kenneth and Daniilidis, Kostas},
  booktitle={European Conference on Computer Vision},
  year={2022}
}