This paper presents a simple approach to capturing the appearance and structure of immersive scenes based on the imagery acquired with an omnidirectional video camera. The scheme proceeds by combining techniques from structure from motion with ideas from image-based rendering. An interactive photogrammetric modeling scheme is used to recover the locations of a set of salient features in the scene (points and lines) from image measurements in a small set of keyframe images. The estimates obtained from this process are then used as a basis for estimating the position and orientation of the camera at every frame in the video clip.
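
The page does not say which estimator computes the per-frame pose once the feature locations are known. The Python code below is a minimal sketch of one standard option, linear (DLT-style) resection from known 3D points. It is explicitly not the authors' method. It assumes a calibrated omnidirectional camera, so that each image measurement can be lifted to a unit bearing vector b_i in the camera frame, and it uses the convention X_cam = R X_world + t. Each correspondence gives the linear constraint b_i × ([R | t] X̃_i) = 0. Stacking these constraints and taking the SVD null vector recovers [R | t] up to scale. The function names are hypothetical, and only point features are handled; the line features mentioned above are omitted.

```python
import numpy as np


def skew(v):
    """Return the 3x3 matrix [v]_x such that skew(v) @ w == np.cross(v, w)."""
    return np.array([[0.0, -v[2], v[1]],
                     [v[2], 0.0, -v[0]],
                     [-v[1], v[0], 0.0]])


def resect_from_bearings(points_world, bearings):
    """Hypothetical helper: estimate (R, t) with X_cam = R @ X_world + t.

    points_world: (N, 3) feature positions (e.g. from keyframe modeling), N >= 6,
                  not all coplanar.
    bearings:     (N, 3) unit bearing vectors measured in the camera frame
                  (assumes a calibrated omnidirectional camera).
    """
    points_world = np.asarray(points_world, dtype=float)
    bearings = np.asarray(bearings, dtype=float)
    blocks = []
    for X, b in zip(points_world, bearings):
        Xh = np.append(X, 1.0)[None, :]          # homogeneous point as a 1x4 row
        # P @ Xh.T == kron(I3, Xh) @ P.ravel(), so b x (P Xh) = 0 is linear in P.
        blocks.append(skew(b) @ np.kron(np.eye(3), Xh))
    A = np.vstack(blocks)                          # (3N, 12) constraint matrix
    _, _, Vt = np.linalg.svd(A)
    P = Vt[-1].reshape(3, 4)                       # null vector = [R | t] up to scale

    M = P[:, :3]
    if np.linalg.det(M) < 0:                       # fix the sign so M = s * R with s > 0
        P = -P
        M = P[:, :3]
    U, S, Wt = np.linalg.svd(M)
    R = U @ Wt                                     # nearest rotation to M / s
    scale = S.mean()                               # estimate of the scale s
    t = P[:, 3] / scale
    return R, t
```

With noise-free data and at least six points in general (non-coplanar) position, this linear estimate is exact. With real measurements, it would normally serve only as an initial guess for a nonlinear least-squares refinement of the angular or reprojection error.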

By augmenting the video sequence with pose information, we provide the end user with the ability to index the video sequence spatially rather than temporally. This allows the user to explore the immersive scene by interactively selecting the desired viewpoint and viewing direction.
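
The following is one way to realize this spatial indexing, sketched under assumptions the page does not state. The index stores each frame's recovered pose. A query picks the frame whose camera center is closest to the requested viewpoint and rotates the requested viewing direction into that frame's camera coordinates. An omnidirectional frame covers a very wide field of view, so the requested view can then be cut from that single frame. The mapping from a camera-frame direction to panorama pixels depends on the camera's projection model and is left out. The class name `SpatialFrameIndex` and the pose convention X_cam = R X_world + t are illustrative choices.

```python
import numpy as np


class SpatialFrameIndex:
    """Hypothetical helper: look up video frames by camera position instead of by time."""

    def __init__(self, rotations, translations):
        # Per-frame pose with X_cam = R @ X_world + t (assumed convention).
        self.rotations = np.asarray(rotations, dtype=float)        # (F, 3, 3)
        translations = np.asarray(translations, dtype=float)        # (F, 3)
        # Camera center in world coordinates: c = -R^T t.
        self.centers = -np.einsum("fji,fj->fi", self.rotations, translations)

    def query(self, viewpoint, view_dir_world):
        """Return (frame index, viewing direction in that frame's camera coordinates)."""
        dists = np.linalg.norm(self.centers - np.asarray(viewpoint, dtype=float), axis=1)
        f = int(np.argmin(dists))                                   # nearest recorded viewpoint
        v = np.asarray(view_dir_world, dtype=float)
        v = v / np.linalg.norm(v)
        # Directions rotate but do not translate: v_cam = R v_world.
        return f, self.rotations[f] @ v
```

The linear scan costs O(F) per query. For long clips, a k-d tree built over `centers` (for example `scipy.spatial.cKDTree`) would be a natural replacement.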

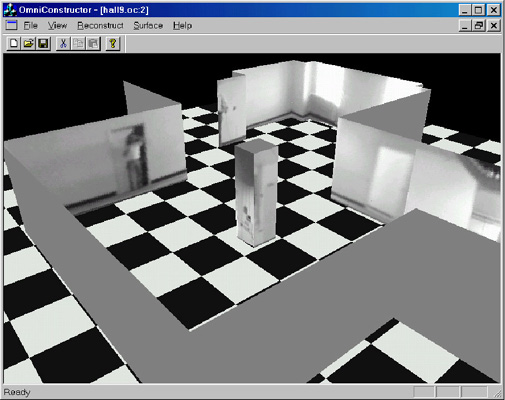

Sample Reconstructions

Furness Library

GRASP Laboratory

Fort Sam
