Nested Words

aka Visibly Pushdown Languages

What are nested words?

Nested words is a model for

representation of data with both a

linear ordering and a hierarchically nested

matching of items. Examples of data with such dual linear-hierarchical structure

include executions of structured programs,

annotated linguistic data,

and HTML/XML documents.

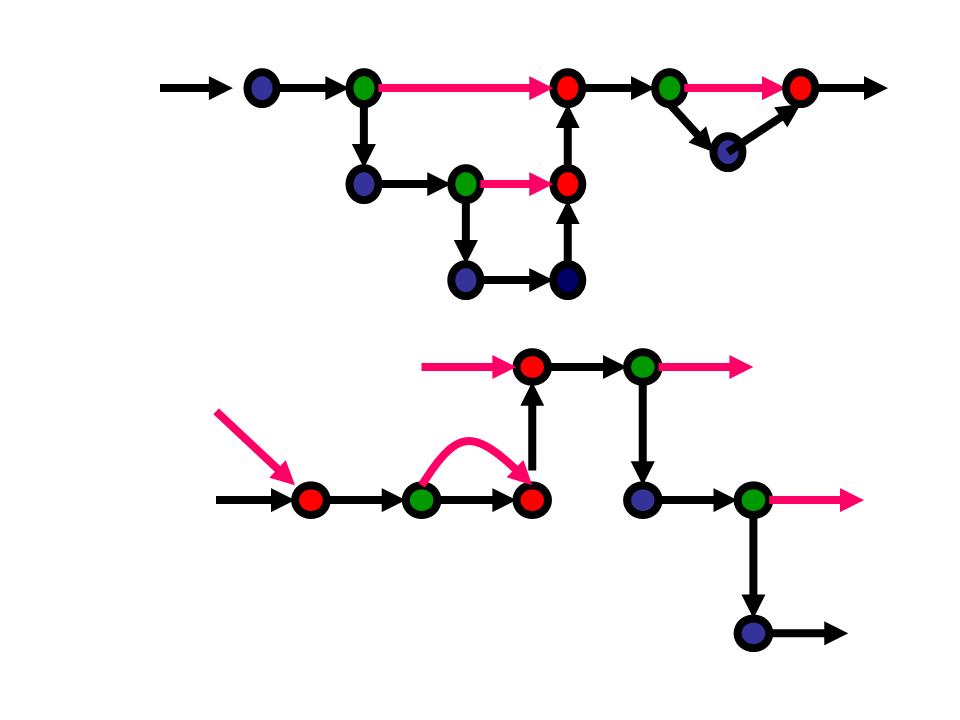

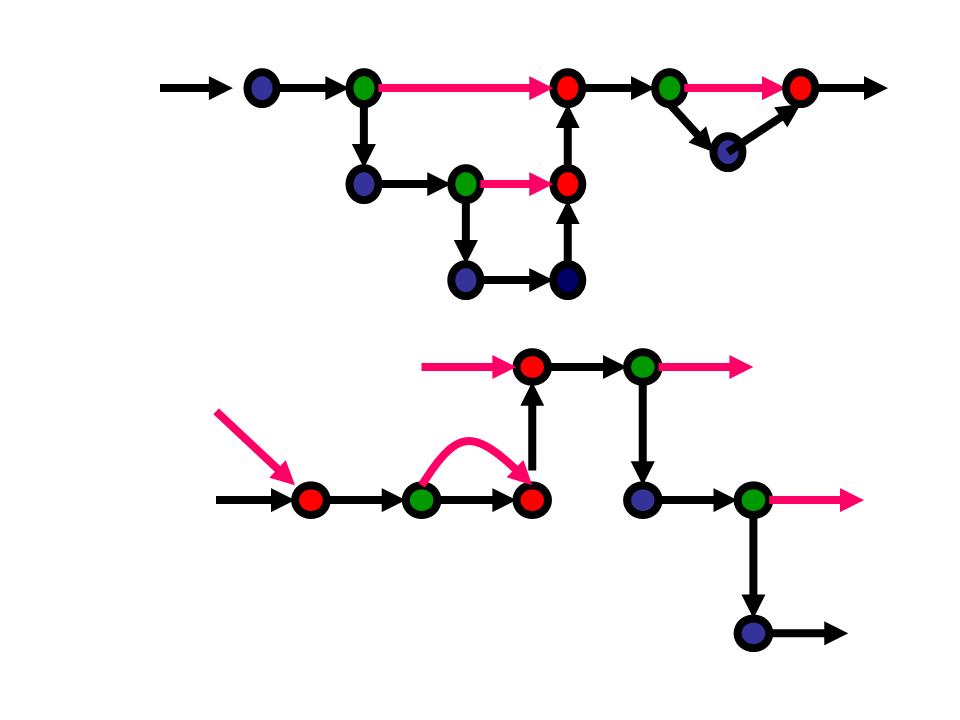

A nested word consists of a sequence of linearly ordered positions, augmented

with nesting edges connecting

calls to

returns (or

open-tags to

close-tags).

The edges do not cross creating a properly nested hierarchical structure,

and we allow some of the edges to be pending. This nesting structure can be uniquely

represented by a sequence specifying the types of positions (calls, returns,

and internals).

Nested words generalize both words and ordered trees, and

allow both word and tree operations.

Nested words is a model for

representation of data with both a

linear ordering and a hierarchically nested

matching of items. Examples of data with such dual linear-hierarchical structure

include executions of structured programs,

annotated linguistic data,

and HTML/XML documents.

A nested word consists of a sequence of linearly ordered positions, augmented

with nesting edges connecting

calls to

returns (or

open-tags to

close-tags).

The edges do not cross creating a properly nested hierarchical structure,

and we allow some of the edges to be pending. This nesting structure can be uniquely

represented by a sequence specifying the types of positions (calls, returns,

and internals).

Nested words generalize both words and ordered trees, and

allow both word and tree operations.

Nested word automata---finite-state acceptors for nested words,

define the class of regular languages of nested words.

This class has all the

appealing theoretical properties that the classical regular word

languages enjoys:

deterministic nested word automata are as expressive as their

nondeterministic counterparts; the class is closed under union, intersection,

complementation, concatenation, Kleene-*, prefixes, and language homomorphisms;

membership, emptiness, language

inclusion, and language equivalence are all decidable; and definability in

monadic second order logic corresponds exactly to finite-state recognizability.

These results generalize to infinite nested words also.

How do they relate to context-free languages of words?

Given a language L of nested words over an alphabet, the linear encoding

of nested words gives a language L' over the tagged alphabet consisting

of symbols tagged with the type of the position.

If L is regular language of nested words, then L' is context-free.

In fact, the pushdown automaton accepting L' has a special structure:

while reading a call, the automaton must push one symbol, while reading a

return symbol, it must pop one symbol (if the stack is non-empty), and while

reading an internal symbol, it can only update its control state.

We call such automata visibly pushdown automata and the

class of word languages they accept visibly pushdown languages (VPL).

Since our automata can be determinized,

VPLs correspond to a subclass of deterministic context-free languages (DCFL).

VPLs generalize of paranthesis languages, bracketed languages,

and balanced languages, and

they have better closure properties than

CFLs, DCFLs, or paranthesis languages.

We argue that for algorithmic verification of structured programs,

instead of viewing the program as a context-free language over words, one

should view it as a regular language of nested words (or equivalently,

a visibly pushdown language), and this would allow

model checking of many properties (such as stack inspection, pre-post

conditions) that are not expressible in existing specification logics.

In general, pushdown automata serve two distinct purposes:

discovering the hierarchical matching, and

processing/querying the matching.

In applications where only the second purpose is relevant

(as in program analysis), one can replace pushdown automata with NWAs

with many benefits.

How do they relate to ordered trees?

Data with dual linear-hierarchical structure is traditionally modeled

using binary, and more generally, using ordered unranked, trees,

and queried using tree automata.

In ordered trees, nodes with the same parent are linearly ordered,

and the classical tree traversals such as infix (or depth-first left-to-right)

can be used to define an implicit ordering of all nodes.

It turns out that,

hedges, where a hedge is a sequence of ordered trees,

are a special class of nested words, namely, the ones

corresponding to Dyck words, and

regular hedge languages correspond to balanced languages.

For document processing, nested words do have many advantages

over ordered trees.

Tree-based representation implicitly assumes that the input linear data

can be parsed into a tree, and thus, one cannot represent and process data that

may not parse correctly. Word operations such as prefixes, suffixes, and concatenation,

while natural for document processing, do not have analogous tree operations.

Second, tree automata can naturally express constraints on the sequence of labels

along a hierarchical path, and also along

the left-to-right siblings, but they have difficulty to capture constraints

that refer to the global linear order.

For example, the query that patterns p1,... pk appear in the document in that order

compiles into a deterministic word automaton (and hence

deterministic NWA) of linear size, but standard

deterministic bottom-up tree automaton for this query must be of size exponential in k.

NWAs can be viewed as a kind of tree automata

such that both bottom-up tree automata and top-down tree automata are

special cases.

These results imply that a query can be more succinctly

encoded in the nested words view with complexity benefits

A nested word automaton reads the word from left to right, processing

the nesting edges as and when they arrive. This matches with the SAX

API for XML, and thus has a natural use in streaming

algorithms.

References

The model of nested words went through a couple of iterations:

See Visibly pushdown languages; Alur and Madhusudan; STOC 2004;

and Adding nesting structure to words; Alur and Madhusudan; DLT 2006.

We recommend reading

this unified full version (Journal of the ACM, 2009).

The invited talk at CSR 2007 is also a good starting point.

The purpose of this page is to keep track of the extensive follow-up research on this topic.

Email me with comments and/or suggested additions.

Tools

Additional Decision Problems for VPAs/NWAs

- Visibly pushdown games; Loding, Madhusudan, and Serre; FSTTCS 2004.

- Visibly pushdown automata: From language equivalence to simulation and

bisimulation; Srba; CSL 2006.

- Regularity problems for visibly pushdown languages; Barany, Loding and Serre;

STACS 2006.

- On the membership problem for visibly pushdown languages; La Torre, Napoli,

and Parente; ATVA 2006.

- Symbolic visibly pushdown automata; D'Antoni and Alur; CAV 2014.

Congruences and Minimization

- Congruences for visibly pushdown languages;

Alur, Kumar, Madhusudan, and Viswanathan; ICALP 2005.

- Minimization, learning, and conformance testing of Boolean programs;

Kumar, Madhusudan, and Viswanathan; CONCUR 2006.

- Minimizing variants of visibly pushdown automata;

Chervet and Walukiewicz; MFCS 2007.

- Minimization of Visibly Pushdown Automata Using Partial Max-SAT; Heizmann, Schilling and Tischner; TACAS 2017.

- Minimization of visibly pushdown automata is NP-complete; Gauwin, Muscholl, and Raskin; LMCS 2020.

Temporal and Fixpoint Logics; Expressiveness

- A temporal logic of nested calls and returns;

Alur, Etessami, and Madhusudan; TACAS 2004.

- Regular languages of nested words: Fixed points, automata,

and synchronization; Arenas, Barcelo, and Libkin; ICALP 2007.

- First-order and temporal logics for nested words,

Alur, Arenas, Barcelo, Etessami, Immerman, and Libkin; LICS 2007.

- Alternating automata and a temporal fixpoint calculus for visibly pushdown languages;

Bozzelli; CONCUR 2007.

- A grammatical representation of visibly pushdown languages; Baran and Barringer;

WoLLIC 2007.

- Weighted logics for nested words and algebraic formal power series;

Matissen; ICALP 2008.

- Visibly rational expressions; Bozzelli and Sanchez; FSTTCS 2012.

- Visibly linear temporal logic; Bozzelli and Sanchez; IJCAR 2014.

Specifications for Program Analysis

- VPA-based aspects: Better support for AOP over protocols;

Nguyen and Sudholt; SEFM 2006.

- Instrumenting C programs with nested word monitors;

Chaudhuri and Alur; SPIN 2007.

- Synthesizing monitors for safety properties -- This time with calls and returns;

Rosu, Chen, and Ball; RV 2008.

- Temporal reasoning for procedural programs; Alur and Chaudhuri; VMCAI 2010.

- Nested interpolants; Heizmann, Hoenicke, and Podelski; POPL 2010.

- Checking compatibility of a producer and a consumer;

Drscoll, Burton, and Reps; FSE 2011.

- Secure programming via visibly pushdown safety games;

Harris, Jha, and Reps; CAV 2012.

XML Processing and Tree Automata

- Visibly pushdown expression effects for XML stream processing;

Pitcher; PLAN-X 2005.

- Visibly pushdown languages for streaming XML;

Kumar, Madhusudan, and Viswanathan; WWW 2007.

- Marrying words and trees; Alur; PODS 2007.

- Rewriting of visibly pushdown languages for XML data integration;

Thomo and Venkatesh; CIKM 2008.

- Streaming tree automata; Gauwin, Niehren, and Roos; Information Processing

Letters 2009.

- Earliest query answering for deterministic nested word automata;

Gauwin, Niehren, and Tison; FCT 2009.

- Query automata for nested words; Madhusudan and Viswanathan; MFCS 2009.

- From regular expressions to nested words: Unifying languages and query execution for relational and XML sequences;

Mozafari, Zeng, Zaniolo; VLDB 2010.

- High-performance complex event processing over XML streams;

Mozafari, Zeng, Zaniolo; SIGMOD 2012.

- Streamable fragments of forward XPath; Gauwin and Niehren; CIAA 2012.

- Early XPath node selection on XML streams; Debarbieux, Gauwin, Niehren, Sebastian, and Zergaoui; 2012.

Transducers

- Visibly pushdown transducers for approximate validation of streaming XML;

Thomo, Venkatesh, and Ye; FoIKS 2008.

- Visibly pushdown transducers;

Raskin and Servais; ICALP 2008.

- Equivalence of Deterministic Nested Word to Word Transducers; Staworko, Laurence, Lemay, Niehren;

FCT 2009.

-

Properties of Visibly Pushdown Transducers;

E. Filiot, J.-F. Raskin, P.-A. Reynier, F. Servais and J.-M. Talbot;

MFCS 2010.

- XEvolve: An XML Schema Evolution Framework;

F. Picalausa, F. Servais and E. Zimŕnyi;

SACSVT 2011.

- Streamability of Nested Word Transductions; E. Filiot, O. Gauwin, P.-A. Reynier, F. Servais. FSTTCS 2011.

- Streaming tree transducers;

R. Alur and L. D'Antoni; ICALP 2012.

- Visibly Pushdown Transducers with Look-Ahead. E. Filiot and F. Servais. SOFSEM 2012.

Nested Trees

- A fixpoint calculus for local and global program flows;

Alur, Chaudhuri, and Madhusudan; POPL 2006.

- Languages of nested trees; Alur, Chaudhuri, and Madhusudan; CAV 2006.

- Visibly pushdown languages and term rewriting; Chabin and Rety; FroCos 2007.

- Visibly Tree Automata with Memory and Constraints; Comon-Lundh, Jacquemard, Perrin;

Logical Methods in Computer Science 2008.

Words with Multiple Nestings

- A note on nested words; Blass and Gurevich; Microsoft Research TR; 2006.

- A robust class of context-sensitive languages; La Torre, Madhusudan, and Parlato;

LICS 2007.

- 2-Visibly Pushdown Automata; Carotenuto, Murano, and Peron; DLT 2007.

- Realizability of concurrent recursive programs;

Bollig, Grindei, and Habermehl; FoSSaCS 2009.

New Results Using Visibility of Calls/Returns

- Third-order Idealized Algol with iteration is decidable;

Murawski and Walukiewicz; FoSSaCS 2005.

- Synchronization of pushdown automata; Caucal; DLT 2006.

- Propositional dynamic logic with recursive programs; Loding and Serre; FoSSaCS 2006.

- Height-deterministic pushdown automata; Nowotka and Srba; MFCS 2007.

- An infinite automaton characterization of double exponential time;

La Torre, Madhusudan, and Parlato; CSL 2008.

A Challenging Open Problem (Now Solved!)

Consider the following decision problem: given two regular languages L1 and L2 of nested words,

does there exist a regular language R of words over the tagged alphabet such that

Intersection(R,L1) equals L2?

This is not known to be decidable, even for the special case that L1 is the set of all well-matched words.

The motivation is the following: in general, to check whether the input belongs to L2, the processing machine

needs a stack. But suppose we already have some additional knowledge about the input, that it belongs to the set L1

(for example, we may know that the input is well-matched), can this knowledge be used to

construct a DFA A such that for inputs in L1, A is able to decide membership in L2.

This problem is inspired by the paper "Validating streaming XML documents" by Segoufin and Vianu, PODS 2002,

which also presents a partial solution.

Recently, Eryk Kopczynski has proved undecidability of this problem:

see Invisible pushdown languages, LICS 2016.

View this

page in Romanian courtesy of azoft

Slovakian translation created by Sciologness Team

Czech translation created by Kate Bondareva

Russian translation created by Boostpack

Greek translation courtesy of Dimitris Galatas

Polish translation by Weronika Pawlak

Estonian translation by Karolin Lohmus

Maintained by Rajeev Alur

Nested words is a model for

representation of data with both a

linear ordering and a hierarchically nested

matching of items. Examples of data with such dual linear-hierarchical structure

include executions of structured programs,

annotated linguistic data,

and HTML/XML documents.

A nested word consists of a sequence of linearly ordered positions, augmented

with nesting edges connecting

calls to

returns (or

open-tags to

close-tags).

The edges do not cross creating a properly nested hierarchical structure,

and we allow some of the edges to be pending. This nesting structure can be uniquely

represented by a sequence specifying the types of positions (calls, returns,

and internals).

Nested words generalize both words and ordered trees, and

allow both word and tree operations.

Nested words is a model for

representation of data with both a

linear ordering and a hierarchically nested

matching of items. Examples of data with such dual linear-hierarchical structure

include executions of structured programs,

annotated linguistic data,

and HTML/XML documents.

A nested word consists of a sequence of linearly ordered positions, augmented

with nesting edges connecting

calls to

returns (or

open-tags to

close-tags).

The edges do not cross creating a properly nested hierarchical structure,

and we allow some of the edges to be pending. This nesting structure can be uniquely

represented by a sequence specifying the types of positions (calls, returns,

and internals).

Nested words generalize both words and ordered trees, and

allow both word and tree operations.